An Intro to Using the Cloud for Post Production

On this episode of 5 THINGS, we gonna get hiiiiiigh! In the clouds, with a primer on using the cloud for all things post-production. This is going to be a monster episode, so we better get started.

1. Why use the cloud for post-production?

We in the Hollywood post industry are risk-averse. Yes, it’s true my fam, look in the mirror, and take a good hard look and realize this truism.

Take the hit.

This is mainly because folks who make a living in post-production rely on predictable timetables and airtight outcomes. Deviating from this causes a potentially missed delivery or airdate, additional costs on an already tight budget, and quite frankly more stress.

The cloud is still new-ish, and virtually all post tasks can be accomplished on-premises. So why on earth should we adopt something that we can’t see, let alone touch?

Incorporating the cloud into your workflow gives you a ton of advantages. For one, you’re not limited to the 1 or 2 computers available to you locally. This gives us what I like to call parallel creation, where we can multitask across multiple computers simultaneously. Powerful computers. I’m talking exaFLOPS, zettaFLOPS, and someday, yottaFLOPS of processing power…way more flopping power than that overclocked frankenputer in your closet.

Yeah, I said it. Flopping power.

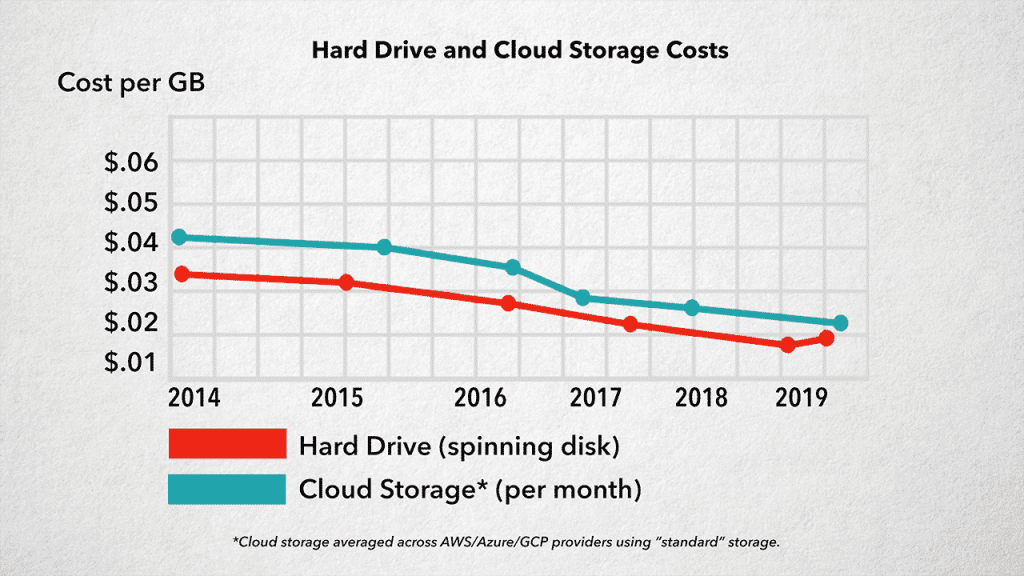

It’s also mostly affordable and getting cheaper quickly.

To be clear, I’m not telling you post-production is to be done only on-premises or only in the cloud; most workflows will always incorporate both. That being said, the cloud isn’t for everyone. If you have more time than money, well, then relying on your aging local machines may be the most economical choice. If your internet connection is more 1999 than 2019, then the time spent uploading and downloading media may be prohibitive. This is one reason I’m jazzed about 5G…but that’s another episode.

Now, let’s look at some scenarios where the cloud may benefit your post-production process.

2. Transfer and Storage

Alright, let’s start small. I guarantee all of you have used some form of cloud transfer service and are storing at least something in the cloud. This can take the form of file sharing and sync applications like Dropbox, transfer sites like WeTransfer, enterprise solutions like Aspera, Signiant, or FileCatalyst, or even that antiquated, nearly 50-year-old protocol known as FTP.

Short of sending your footage via snail mail or handcuffing it to someone while they hop on a plane, using the internet to store and transfer data is a common solution. The cloud offers numerous benefits.

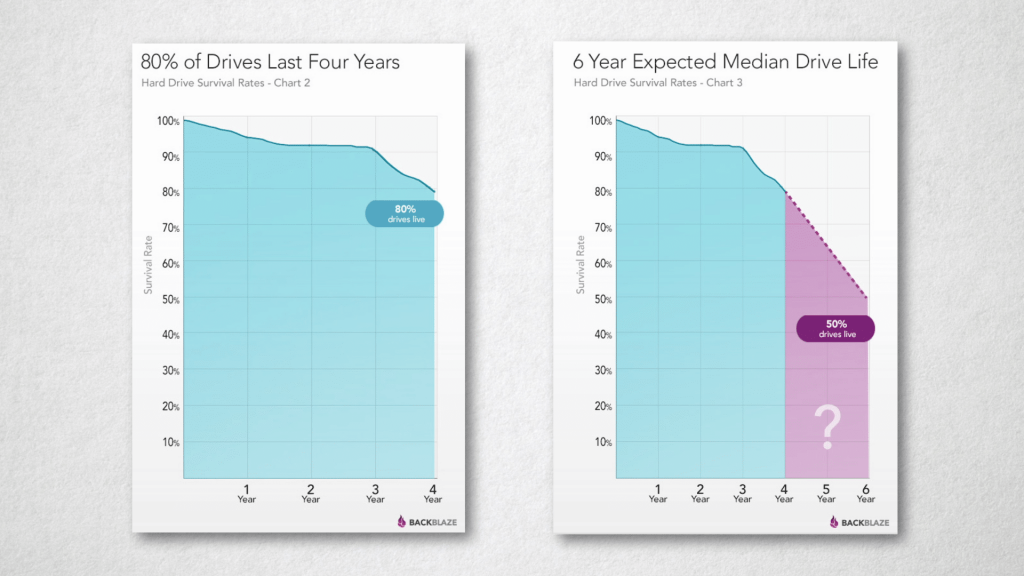

First is what we call the “five nines”, or 99.999% availability. This means that the storage in the cloud is available virtually all the time, with a max downtime of about 5 ¼ minutes a year. In the cloud, five nines is often considered the bare minimum. Companies like Backblaze claim eleven nines of durability, meaning the odds of actually losing a stored file are vanishingly small. This is considerably more robust than, say, that aging spinning disk you have sitting on your shelf. In fact, almost a quarter of all spinning hard drives fail in their first 4 years.

Ouch.
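If you want to sanity check the “nines” math yourself, here’s a minimal sketch. It assumes a 365-day year and treats availability purely as a fraction of time; the function name is just something I made up for illustration.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def max_downtime_minutes(nines: int) -> float:
    """Return the yearly downtime allowed by an availability of N nines."""
    if nines < 1:
        raise ValueError("nines must be at least 1")
    unavailable_fraction = 10.0 ** -nines  # five nines -> 0.00001
    return MINUTES_PER_YEAR * unavailable_fraction


if __name__ == "__main__":
    for nines in (3, 4, 5):
        minutes = max_downtime_minutes(nines)
        print(f"{nines} nines: {minutes:.2f} minutes of downtime per year")
```

Each extra nine cuts the allowed downtime by a factor of ten, which is why the jump from three nines to five is such a big deal.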

I completely get the fact that the subscription or “rental” models are a highly divisive subject, and at the end of the day, that’s what the cloud storage model is. But you can’t deny that a cost you get to spread out over years (also known as OpEx, or operating expenditure budget) is a bit more flexible and robust than the one-time buyout of storage (known as CapEx, or capital expenditure budget).

Which brings us to the next point, which is “what are the differences between the various cloud storage options?” Well, that deserves its own 5 THINGS episode, but the 2 main points to know are that the pricing model covers “availability”, or how quickly you can access the storage and read and write from it, and throughput, or how fast you can upload and download to it. Slower storage is cheaper, and normal internet upload and download speeds are in line with what the storage can provide. Fast storage, that is, storage that gives you gigabits per second and high IOPS for cloud editing, can be several hundred dollars a month per usable TB.

This is why cloud storage is often used as a transfer medium, or as a backup or archive solution, rather than a real-time editing platform. However, with the move to more cloud-based applications, faster storage will become necessary. With private clouds and data lakes popping up all over, the cost of cloud storage will continue to drop, much like the hard drive cost per TB has dropped over the past several years.
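To figure out whether using the cloud as a transfer medium is realistic on your connection, a back-of-the-napkin estimate goes a long way. The sketch below is only that: the 0.8 efficiency factor is an assumed fudge number for protocol overhead and a shared pipe, and the function name is hypothetical.

```python
def transfer_hours(size_gb: float, link_mbps: float, efficiency: float = 0.8) -> float:
    """Estimate the hours needed to move size_gb gigabytes over a link_mbps link.

    efficiency is an assumed fraction of the advertised speed you actually get.
    """
    if size_gb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("size must be >= 0, speed > 0, efficiency in (0, 1]")
    size_megabits = size_gb * 1000 * 8  # decimal gigabytes -> megabits
    seconds = size_megabits / (link_mbps * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    # Example: 1 TB of camera originals at a few illustrative upload speeds.
    for mbps in (20, 100, 1000):
        print(f"{mbps:>5} Mbps: {transfer_hours(1000, mbps):.1f} hours")
```

Plug in your own upload speed, not your download speed. For most connections they are very different numbers.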

Cloud storage also has the added benefit of letting you work outside of your office and collaborate in real time without having to be within the 4 walls of your company. Often, high-end firewalls and security are, well, highly priced, and your company may not have that infrastructure…or the I.T. talent to take on such an endeavor. When you rely on the cloud, that security is built into your monthly price. Plus, most security breaches or hacks are due to human error or social engineering, not a fault in the security itself. Cloud storage also abstracts the physical location of your stored content from your business, making unauthorized access and physical attacks that much harder.

3. Rendering and Transcoding and VFX

The next logical step in utilizing cloud resources is to offload the heavy lifting of your project that requires Flopping Power.

The smart folks working in animation and VFX have been doing this for years. Rendering 100,000 frames (about an hour’s worth of material, depending on your frame rate) across hundreds or thousands of processors is gonna be finished much faster than across the handful of processors you have locally. It’s also a helluva lot cheaper to spin up machines as needed in the cloud than to buy the horsepower outright for your suite.
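Here’s a rough sketch of the math behind that claim. The 5 minutes per frame is a made-up render time purely for illustration, and the model assumes frames split perfectly evenly with zero queueing, transfer, or startup overhead, which no real farm achieves.

```python
import math


def runtime_minutes(frames: int, fps: float) -> float:
    """Playback length, in minutes, of a frame count at a given frame rate."""
    return frames / fps / 60


def ideal_render_hours(frames: int, minutes_per_frame: float, machines: int) -> float:
    """Best-case wall-clock hours when frames are spread evenly across machines."""
    if machines < 1:
        raise ValueError("need at least one machine")
    frames_per_machine = math.ceil(frames / machines)
    return frames_per_machine * minutes_per_frame / 60


if __name__ == "__main__":
    print(f"100,000 frames at 24 fps: {runtime_minutes(100_000, 24):.1f} minutes")
    print(f"100,000 frames at 30 fps: {runtime_minutes(100_000, 30):.1f} minutes")
    for machines in (4, 100, 1000):
        hours = ideal_render_hours(100_000, 5, machines)
        print(f"{machines:>5} machines: {hours:,.1f} hours")
```

Even as a best case, the gap between a handful of local machines and a thousand rented ones is the difference between months and an afternoon.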

Before you begin, you need to determine what you’re creating your models in and if cloud rendering is even an option. Typical creative environments that support cloud rendering workflows include tools like 3ds Max, Maya, and Houdini, among others.

Next is identifying the CSP (cloud service provider) that will host your render farm in the cloud. In this case, that usually means one of the big 3: Microsoft Azure, Amazon Web Services, or Google Cloud.

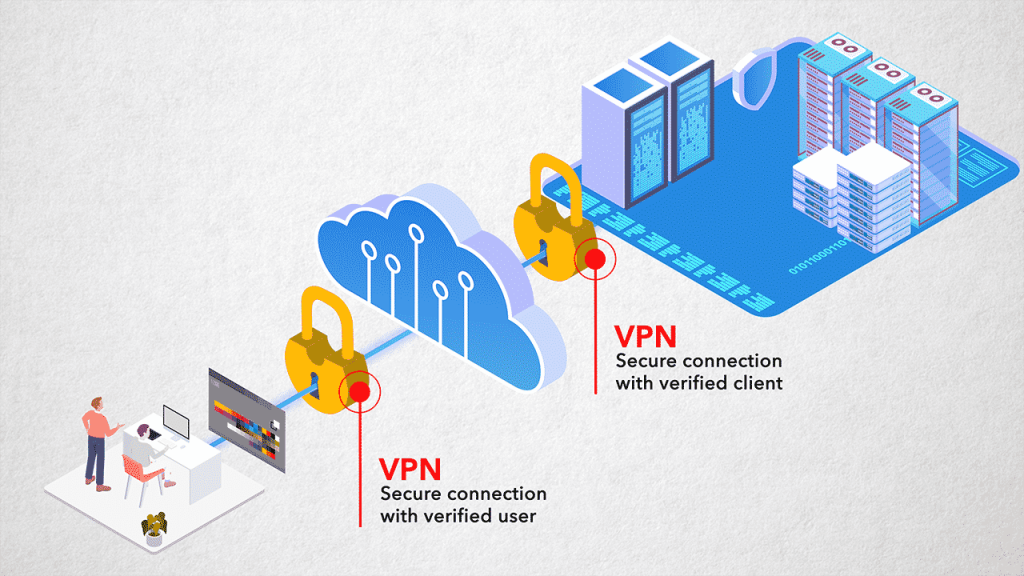

Once you have your CSP selected, you establish a secure connection to that CSP, usually via a VPN, or virtual private network. A VPN adds an encrypted layer of security between your machine and the CSP. It also provides a direct pipe to send and receive data between your local machines and your CSP.

From here, queuing and render management software is needed. This is what schedules the renders across multiple machines and ensures each machine is getting the data it needs to crunch in the most efficient way possible, plus recombines the rendered chunks back together. Deadline and Tractor are popular options. What this software also does is orchestrate media movement between on-premises, the storage staging area before the render, and where the rendered media ends up.
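To make the scheduling idea concrete, here’s a toy sketch of splitting a frame range into chunks and dealing them out to machines. To be clear, this is not how Deadline or Tractor work internally; real render managers schedule dynamically and handle failures, priorities, and data movement. The worker names and function names are hypothetical.

```python
from typing import Dict, List, Tuple

FrameChunk = Tuple[int, int]  # inclusive (first_frame, last_frame)


def split_frames(first: int, last: int, chunk_size: int) -> List[FrameChunk]:
    """Split the inclusive frame range [first, last] into inclusive chunks."""
    if chunk_size < 1 or last < first:
        raise ValueError("chunk_size must be >= 1 and last must be >= first")
    return [
        (start, min(start + chunk_size - 1, last))
        for start in range(first, last + 1, chunk_size)
    ]


def assign_round_robin(
    chunks: List[FrameChunk], workers: List[str]
) -> Dict[str, List[FrameChunk]]:
    """Deal chunks out to workers in turn, like dealing cards."""
    if not workers:
        raise ValueError("need at least one worker")
    plan: Dict[str, List[FrameChunk]] = {worker: [] for worker in workers}
    for index, chunk in enumerate(chunks):
        plan[workers[index % len(workers)]].append(chunk)
    return plan


if __name__ == "__main__":
    chunks = split_frames(1, 100, 25)
    for worker, jobs in assign_round_robin(chunks, ["node-a", "node-b"]).items():
        print(worker, jobs)
```

Because each chunk carries its own frame numbers, the rendered frames can be put back in order no matter which machine finishes first.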

Next, the render farm machines run specialized software to render your chosen sequence. This can be V-Ray, Arnold, RenderMan among many others. Once these frames are rendered and added back to the collective sequence, the file is delivered.

I know, this can get daunting, which is why productions traditionally have a VFX or Animation Pipeline Developer. They devise and optimize the workflows so costs are kept down, but the deadlines are hit.

This hybrid methodology obviously blends creation and artistry on-premises, with the heavy lifting done in the cloud. However, there is a more all-in-one solution, and that’s doing *everything* in the cloud.

The VFX artist works with a virtual machine in the cloud, which has all of the flopping power immediately available. The application and media are directly connected to the virtual machine. Companies like BeBop Technology have been doing this with apps like Blender, Maya, 3DS Max, After Effects, and more.

DISCLAIMER: I work for BeBop because I love their tech.

Transcoding, on the other hand, is a much more common way of using the horsepower of the cloud. As an example, ever seen the “processing” message on YouTube? Yeah, that’s YouTube transcoding the files you’ve uploaded to various quality formats. Where this can benefit you is in your deliverables. In today’s VOD landscape, creating multiple formats for various outlets is commonplace. Each VOD provider has the formats they prefer and is often not shy about rejecting your file. Don’t take it personally; often their playout and delivery systems depend on the files they receive being in a particular and exact format.

As an example, check out Netflix requirements.

The hitch here is metadata. Just using flopping power to flip the file doesn’t deliver all of the ancillary data that more and more outlets want. This can be captioning, various languages or alt angles, descriptive text, color information, and more. Metadata resides in different locations within the file, whether it be an MP4, MOV, MXF, IMF, or any of the other container formats. Many outlets also ask for specialized sidecar XML files. I cannot overstate how important this metadata mapping is, and how often it is overlooked.

You may wanna check out AWS Elastic Transcoder, which makes it pretty easy to not only flip files…but also do real-time transcoding if you’re into that sorta thing. Telestream also has its Vantage software in the cloud which adds things like Quality Control and speech to text functions.

There are also specialty transcoding services, like PixelStrings by Cinnafilm, for those tricky frame rate conversions, high-quality retiming, and creating newer formats like IMF packages.

4. Video Editing, Audio Editing, Finishing, and Grading

Audio and video editing, let alone audio mixing and video grading and finishing, are the holy grail for cloud computing in post-production, namely because these processes require human interaction at every step. Adding an edit, setting a keyframe, or touching a fader all require constant and repeatable communication with the creative tool. Cloud computing, if not done properly, can add unacceptable latency, as the user needs to wait for the local keypress to be reflected remotely. This can be infuriating for creatives. A tenth of a second can mean the difference between creativity and…carnage.

There are a few ways to tackle editing when not all of the hardware, software, or media is local to you…and sometimes you can use multiple approaches together for a hybrid approach.

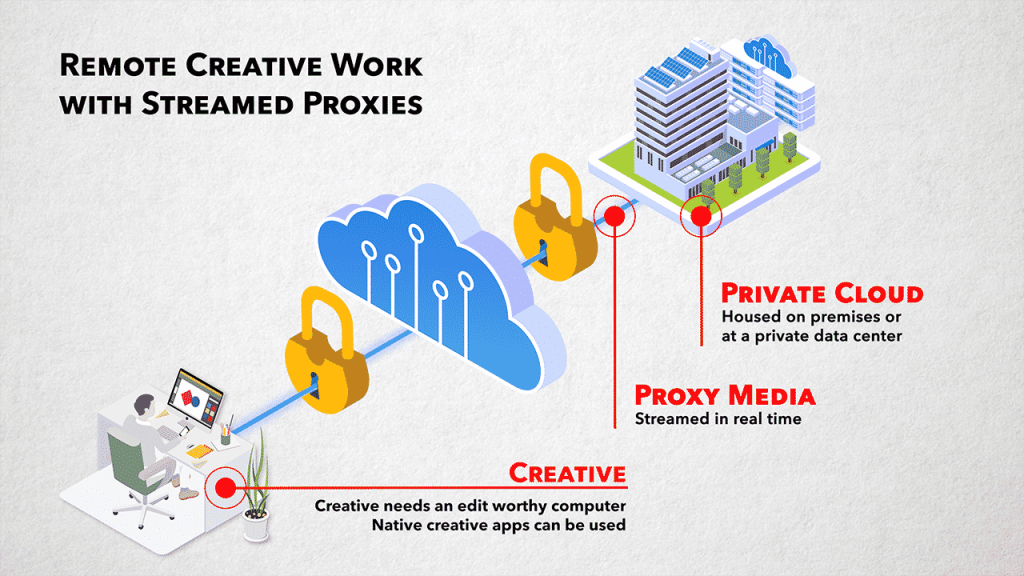

First, we have the private cloud, which can be your own little data center, serving up the media as live proxy streams to a remote creative with a typical editing machine. True remote editing.

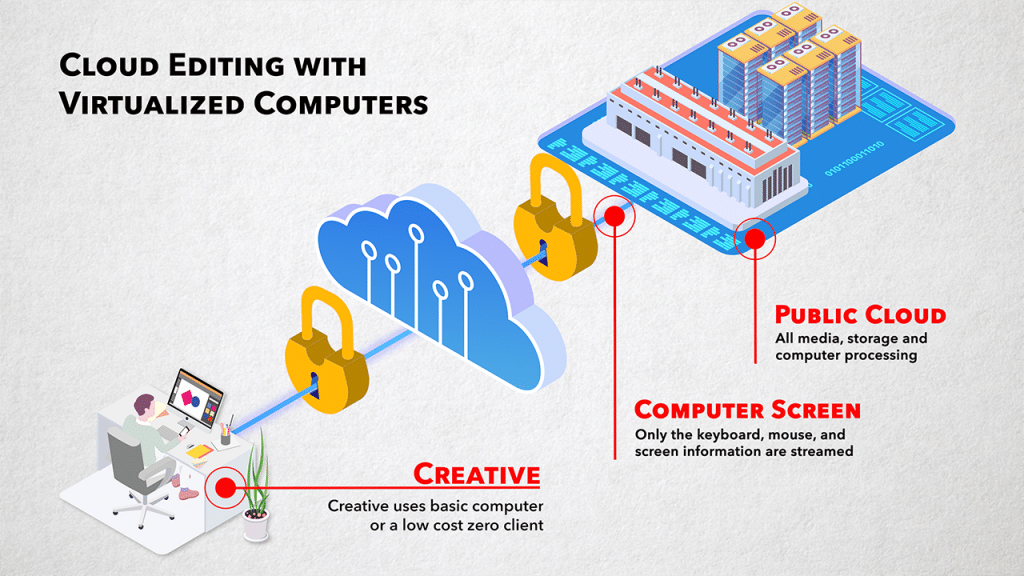

Next, we have the all-in approach, where everything, and I mean absolutely everything, is virtualized in the cloud: the software application, the storage, all of it. You access it all through a basic computer or what we call a zero client.

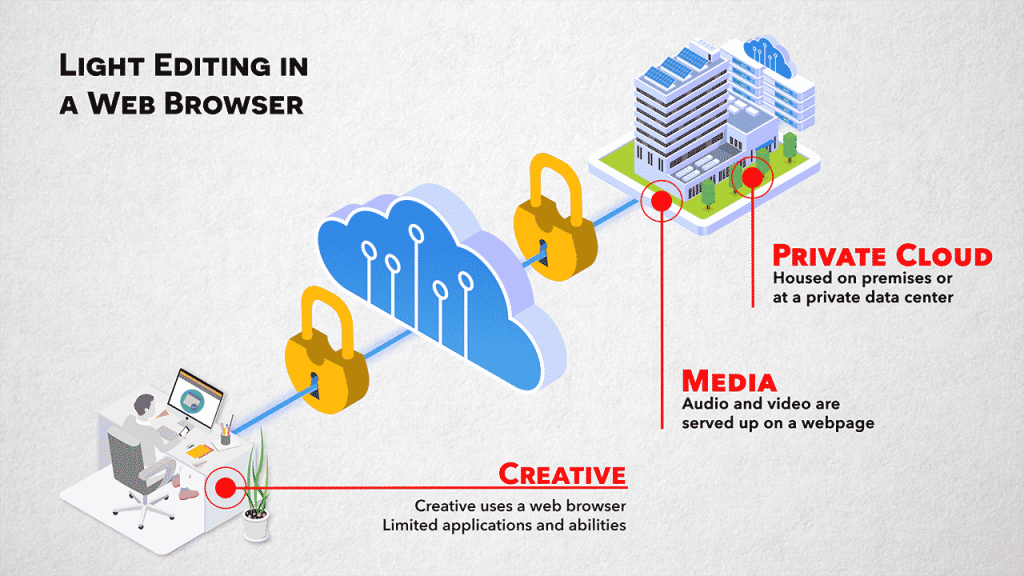

Lastly, we have a hybrid approach. Serve the media up in the cloud to a watered-down web page based editor on your local machine.

Each has its pros and cons.

Both Avid and Adobe have had versions of an on-premises server serving up proxies to remote editing systems for many years. The on-prem server – a private cloud, for all intents and purposes – serves out proxy streams of media for use natively within an Avid Media Composer or Adobe Premiere Pro system connected remotely. Adobe called it Adobe Anywhere, and today the application is…nowhere. The expensive product was shelved after a few years. Avid, however, is still doing this today, using a mix of many Avid solutions, including the product formerly known as Interplay, now called MediaCentral | Cloud UX, a few add-on modules, along with a Media Composer | Cloud Remote license. It’s expensive, usually over $100K.

Back to Adobe, I’d be remiss if I didn’t mention 3rd party asset management systems that carry on the Adobe Anywhere approach. Solutions like VPMS Editmate from Arvato Bertelsmann, or Curator from IPV are options but are based around their enterprise asset management systems, so don’t expect the price tag to be anything but enterprise.

The all-in cloud approach means your NLE and all of the supporting software tools and storage are running in a VM (a virtual machine) in a nearby data center. This brings you the best of both worlds. Your local machine is simply a window into the cloud-hosted VM, which brings you all the benefits of the cloud, presented in a familiar way – a computer desktop. And you don’t have the expensive internal infrastructure to manage. This is tricky though, as creatives need low latency, and geographical distance can be challenging if not done right.

A few companies are accomplishing this, however, using robust screenshare protocols and nearby data centers. Avid has Media Composer and NEXIS running on Azure, which will be available through Avid’s new “Edit on Demand” product. BeBop Technology is accomplishing the same thing, but with dozens of editorial and VFX apps, including Avid and Premiere.

Disclaimer: I still work for BeBop. Because their technology is the sh&t.

Some companies have investigated a novel approach: why not let creatives work in a web browser to ensure cross-platform availability, and to work without the proprietary nature that all major NLEs inherently have? This is a gutsy approach, as most creatives prefer to work within the tools they’ve become skilled in. However, less intensive creative tasks, like string-outs or pulling selects, performed by users who may not be full-time power editors are a good fit. Avid adds some of this functionality into their newer Editorial Management product. Another popular choice for web browser editing is Blackbird, formerly known as FORScene by Forbidden Technology. This paradigm is probably the weakest for you pro editors out there. I don’t know about you, but I want to work on the tools I’ve spent years getting better at.

Alas, Mac-only apps like Final Cut Pro X are strictly local/on-premises solutions. And while there are Mac-centric data centers, often the Apple hardware ecosystem limits configuration options compared to PC counterparts. Most Mac data centers also do not have the infrastructure to provide robust screen sharing protocols to make remote-based Apple editing worthwhile. Blackmagic’s Resolve, while having remote workflows, still requires media to be located on both the local and remote systems. This effectively eliminates any performance benefits found in the cloud.

Audio, my first love, has some way to go. While basic audio in an NLE can be accomplished with the methods I just outlined, emulating pro post audio tools can be challenging.

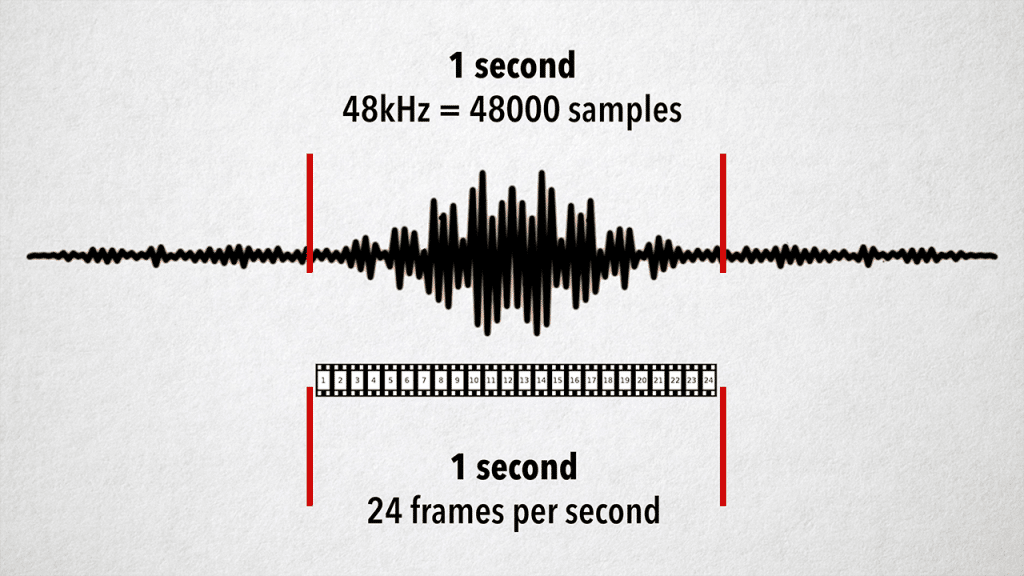

Audio is usually measured in samples. Audio sampled at 48kHz is actually 48,000 individual samples a second. Compare this to 24 to 60 frames a second for video, and you can see why precision is needed when working with audio. This is one reason the big DAW companies don’t yet sanction running their apps in the cloud. Add the latency of a remote machine to creative work at the sample level, and you get a clunky and ultimately unrewarding workflow.
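To put numbers on that, here’s a quick sketch comparing samples to frames, and showing how many samples fly by during that tenth of a second of latency I mentioned earlier. The function names are mine, and the 100 ms figure is just the example delay, not a measurement of any particular service.

```python
def samples_per_frame(sample_rate_hz: int, fps: float) -> float:
    """Number of audio samples that play during a single video frame."""
    return sample_rate_hz / fps


def latency_in_samples(latency_ms: float, sample_rate_hz: int) -> float:
    """Number of audio samples that elapse during a given delay."""
    return sample_rate_hz * latency_ms / 1000


def latency_in_frames(latency_ms: float, fps: float) -> float:
    """Number of video frames that elapse during a given delay."""
    return fps * latency_ms / 1000


if __name__ == "__main__":
    print("Samples per frame at 48 kHz / 24 fps:", samples_per_frame(48_000, 24))
    print("Samples in 100 ms at 48 kHz:", latency_in_samples(100, 48_000))
    print("Frames in 100 ms at 24 fps:", latency_in_frames(100, 24))
```

A delay that costs a picture editor a couple of frames costs an audio editor thousands of samples.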

Pro Tools Cloud is a sorta hybrid, allowing near real-time collaboration on audio tracks and projects. However, audio processing and editing are still performed locally.

On to Finishing and Color Grading in the cloud. Often these tasks take a ton of horsepower. And you’d think the cloud would be great for that! And it will be…someday. These processes usually require the high res or the source media – not proxies. This means the high res media has to be viewed by the finishing or color grade artist. This leaves us with one or both of 2 unacceptable conditions:

- Cloud storage that can also play the high res content is prohibitively expensive, and

- There isn’t a way to transmit high res media streams in real-time to be viewed, and thus graded, without unacceptable visual compression.
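To see why those high res streams are such a problem, here’s a rough sketch of the raw data rate of uncompressed video. The UHD, 10-bit, 4:2:2, 24 fps example is an illustrative assumption, not a spec from any particular show, and the math counts active picture only (no audio, blanking, or container overhead).

```python
# Average samples stored per pixel for common chroma subsampling schemes.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def uncompressed_gbps(
    width: int, height: int, fps: float, bit_depth: int, chroma: str = "4:2:2"
) -> float:
    """Approximate uncompressed video data rate in gigabits per second."""
    if chroma not in SAMPLES_PER_PIXEL:
        raise ValueError(f"unknown chroma subsampling: {chroma}")
    bits_per_frame = width * height * SAMPLES_PER_PIXEL[chroma] * bit_depth
    return bits_per_frame * fps / 1e9


if __name__ == "__main__":
    rate = uncompressed_gbps(3840, 2160, 24, 10, "4:2:2")
    print(f"UHD 10-bit 4:2:2 at 24 fps: {rate:.2f} Gbps")
```

Compare that result to your internet connection and you can see why something has to give, whether that’s compression, proxies, or your budget.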

But NDI you cry! Yes, my tech lover, we’ll cover that in another episode.

While remote grading with cloud media is not quite there, remote viewing is a bit more manageable. And we’ll cover that…now.

5. Review and Approve (and bonus!)

Review and approve is one of the greatest achievements of the internet era for post-production. Leveraging the internet and data centers to house your latest project for feedback is now commonplace. This can be something as simple as pushing to YouTube or Vimeo or shooting someone a Dropbox link.

While this has made collaboration without geographic borders possible, most solutions rely on asynchronous review and approve…that is, you push a file somewhere, someone watches it, then gives feedback.

Real-time collaboration, or synchronous review and approve, meaning the creative stakeholders are all watching the same thing at the same time, is a bit harder to do. As I mentioned earlier, real-time, high-fidelity video streaming can cause artifacts…out of sync audio, reduced frame rates, and all of this can take the user out of the moment. This is where more expensive solutions that are more in line with video conferencing surface. Popular examples include Sohonet’s ClearView Flex, Streambox, or the newer Evercast solution.

However, in this case, these tools are mostly using the cloud as a point-to-point transport mechanism, rather than leveraging the horsepower in the cloud.

NDI holds a great deal of promise. As I already said, we’ll cover that in another episode.

Back to the non-real time, asynchronous review and approve: The compromises with working in an asynchronous fashion are slowly being eroded away by the bells and whistles on top of the basic premise of sharing a file with someone not local to you.

Frame.io is dominating in this space, with plug-ins and extensions for access from right within your NLE, a desktop app for fast media transfers, plus their web page review and approval process, which is by far the best out there.

Wiredrive and Kollaborate are other options, also offering web page review and approval.

I’m also a big fan of having your asset management system tied into an asynchronous review and approval process. This allows permitted folks to see even more content and have any changes or notes tracked within 1 application. Many enterprise DAMs have this functionality. A favorite of mine is CatDV, which has these tools built-in, as well as Akomi by North Shore Automation, which has an even slicker implementation and has the ability to run in the cloud.

As a bonus cloud tool, I’m also a big fan of Endcrawl, an online site that generates credit crawls for your projects without the traditional visual jitteriness from your NLE, and without the inevitable problems of 37 credit revisions.

A heartfelt thank you to everyone who reached out via text or email or shared my last personal video. It means more than you know.

Until the next episode: learn more, do more.

Like early, share often, and don’t forget to subscribe. Thanks for watching.
